Multi-persona LLM

A decision-support system with LLM-generated debates | Oct 2024

HIGHLIGHTS

RESEARCH QUESTION

Large language models (LLMs) are enabling designers to create exciting new user experiences for information access. In this project, we explore a novel LLM-supported information-seeking experience: users launch a debate on any topic of interest, in which generated LLM personas present diverse perspectives on the topic.

We want to explore the active and autonomous nature of LLM agents in this setting as they present information and arguments, creating an argumentative experience between humans and AI. In this project, we designed a system that generates LLM personas to debate a topic of interest from different perspectives. In focusing the user experience on exposure to diverse perspectives, we particularly investigated whether exposure to diverse information via generated personas could help reduce user confirmation bias. Research questions are as follows:

What is the overall user experience when conceptualizing controversial topics through a multi-persona debate interaction simulated by an LLM?

Compared to a traditional retrieval-based system, how effective is the multi-persona debate system in mitigating confirmation bias?

Compared to the retrieval-based system, how does the multi-persona debate system ultimately influence users' pre-existing beliefs?

SYSTEM DESIGN

Controversial social issues, such as gun control, abortion, and climate change, often attract heightened media attention and public interest, and partisanship and political divisions can influence the stances people adopt. This suggests value in exploring new ways by which people might navigate complex and nuanced issues involving divergent positions and ideologies. Users are curious about and interested in exploring diverse views. Someone particularly firm in their own beliefs may be curious about how anyone else could think otherwise.

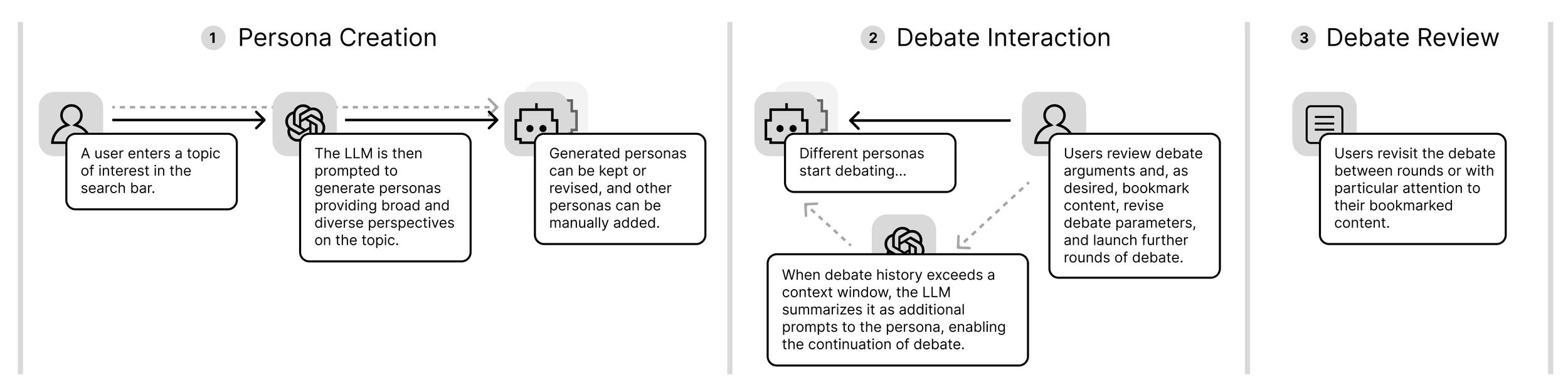

Our multi-persona debate system offers a novel form of information access for such topics, using LLM personas to generate diverse and, possibly, contrasting perspectives. A user begins by entering a topic of interest, similar in spirit to a traditional search query. The system then generates suggested personas to provide broad coverage of differing views. The user can freely keep these generated personas, revise them, or add others. Following this, the user initiates as many rounds of debate as they find valuable, further revising the set of personas at any time to explore additional perspectives. By simulating a debate between personas, each advocating for a distinct perspective, users are exposed to a rich array of arguments, counterarguments, and the complex interplay of ideas that characterize real-world discussions.

Overview of the Multi-Persona Debate System

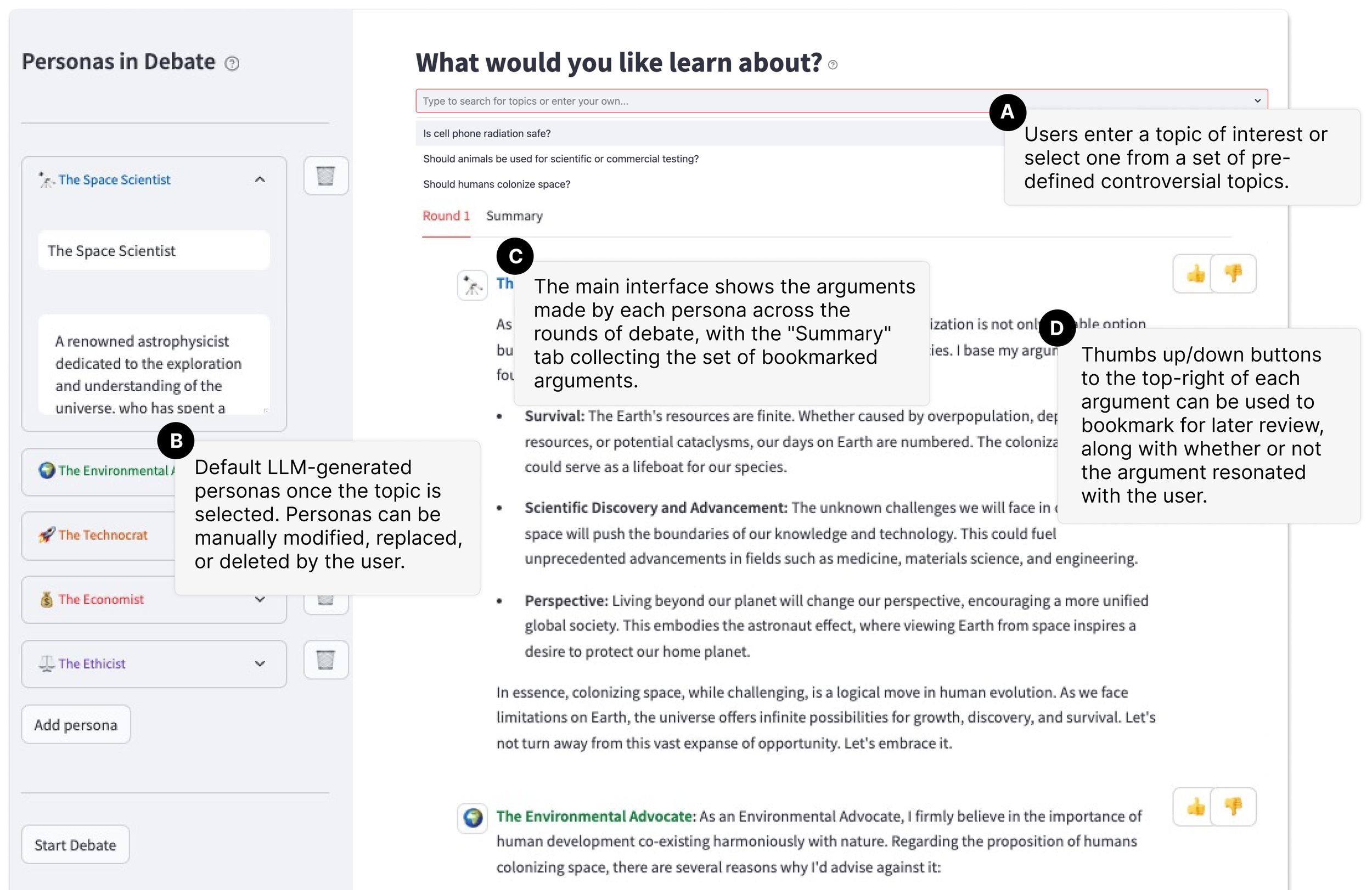

The figure below presents the user interface of our LLM debate system. Major interactive features include:

Controversial Topics Entry or Selection. At the start, users can either enter a topic, possibly controversial, in the search bar or choose one from the list of suggested topics [A]. The system then begins generating LLM personas relevant to that topic.

Persona Creation. As displayed in [B], each persona is represented by a title and description, along with automatically assigned colors and icons to help users easily identify and differentiate between them (which emerged as a need during early pilot testing). We set a predefined number of personas at the beginning (N = 5), but users are free to generate or manually create as many personas as they want. The specific prompt instructions aim to generate diverse LLM personas that represent a range of viewpoints and social roles on the selected topic.

Interactive Debate Flow. During the design of this interface, we addressed a critical design consideration regarding the optimal level of inter-persona interaction within each debate round.

In a structured human debate where debaters are separated into two teams holding opposing views, responses may either focus on arguments presented by a specific debater or address all previously discussed points made by members of the opposing team. Additionally, arguments may unfold sequentially under the guidance of a moderator or occur simultaneously, with debaters from different teams engaging back and forth in animated exchanges. To foster dynamic debate interaction while preventing the user from being overloaded, we have the personas engage in a round-robin (sequential) discussion. The debated content is displayed in a traditional conversational format [C], making it easier for users to follow the debate. The prompt instructions encourage each persona to sequentially formulate their arguments by referring to the entire debate history.

A debate round concludes once all personas have (in order) presented their arguments. Afterward, users can select, deselect, add, or edit personas, and start a new round of debate by clicking the “Start Debate” button. Each time an additional round of debate is initiated, a new tab is created in the interface. This enables users to navigate back and forth across different rounds of the debate when reviewing the arguments presented, which could foster user engagement and interactivity.
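The round-robin flow described above can be sketched as follows. This is a minimal illustration, not the system's actual implementation: the `Persona` and `Turn` classes, the prompt wording, and the `generate` callable (a stand-in for whatever LLM call the system uses) are all assumptions introduced for this example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Persona:
    title: str          # short label shown to the user
    description: str    # viewpoint or social role of the persona


@dataclass
class Turn:
    round_no: int
    persona: str
    argument: str


def run_debate_round(
    topic: str,
    personas: List[Persona],
    history: List[Turn],
    round_no: int,
    generate: Callable[[str], str],  # hypothetical LLM call: prompt -> text
) -> List[Turn]:
    """Run one round: each persona speaks once, in order, and sees the
    entire debate history accumulated so far (including this round)."""
    history = list(history)  # copy so the caller's list is not mutated
    for persona in personas:
        transcript = "\n".join(f"{t.persona}: {t.argument}" for t in history)
        # Hypothetical prompt wording, for illustration only.
        prompt = (
            f"Topic: {topic}\n"
            f"You are {persona.title}: {persona.description}\n"
            f"Debate so far:\n{transcript or '(no arguments yet)'}\n"
            "Present your next argument."
        )
        history.append(Turn(round_no, persona.title, generate(prompt)))
    return history
```

Calling `run_debate_round` again with the returned history and an incremented `round_no` mirrors starting a new round after the user has revised the set of personas.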

Bookmarks. With multiple personas presenting arguments over several rounds of debate, the number of arguments can quickly grow, which risks overwhelming the user. To help keep the set of arguments presented more manageable, we introduce bookmarking in our interface [D], allowing the user to save arguments of particular note for later review. By clicking up/down thumb icons, users can further record whether or not a given argument resonated with their own thinking. These user bookmarks are collected and shown in the main interface’s ‘Summary’ tab, to the right of the latest debate round, as displayed in [C]. Users can review their bookmarked content at any time during or after the debate.

UX RESEARCH

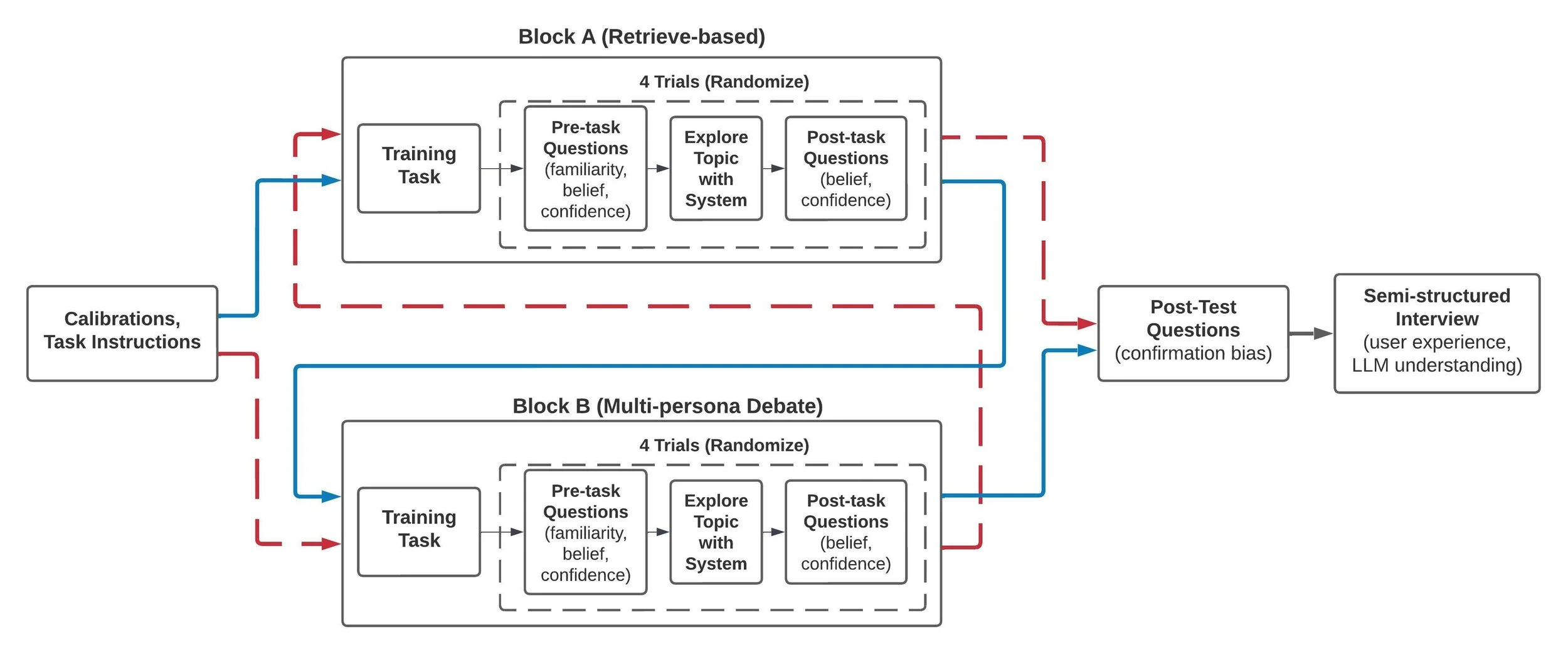

To explore how users might engage with the multi-persona debate system and how effectively it reduces confirmation bias, we conducted a controlled, within-subjects study comparing the multi-persona debate system with a traditional retrieval-based system.

As illustrated in the figure below, the within-subjects experiment started with eye-tracker calibration and task instructions. Participants were then randomly assigned to one of two study blocks, Block A or Block B. In Block A, participants used the baseline system to engage with the topic. We adopted ArgumentSearch (https://www.argumentsearch.com/) as the baseline for our A/B experiments. ArgumentSearch is a retrieval-based search engine that identifies articles from the open web and organizes content snippets according to their stance on the user query.

In Block B, participants interacted with our multi-persona debate system as the experimental condition to explore and generate debate content on the given topic. Each block included a training task to first familiarize participants with the assigned system. Within each block, participants completed four randomized trials, each assigned a different controversial topic. Once they completed the trials in one block, they moved on to the other block and used the alternate system, ensuring they interacted with both systems.

Flowchart of the experimental procedure.
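The block and trial structure of the procedure can be sketched as a small session planner. This is an illustrative sketch only: the function name, the seeded random generator, and the assumption that eight distinct topics are split four per block (with no topic repeated across systems) are not stated in the study description.

```python
import random
from typing import List, Optional, Tuple

# (system, phase, topic); topic is None for the training task
Step = Tuple[str, str, Optional[str]]


def build_session(topics: List[str], seed: int) -> List[Step]:
    """Build one participant's plan: random block order, a training task
    per block, then four trials per block in randomized topic order."""
    if len(set(topics)) != 8:
        raise ValueError("this sketch assumes exactly 8 distinct topics")
    rng = random.Random(seed)      # seeded so a plan can be reproduced
    shuffled = list(topics)
    rng.shuffle(shuffled)          # randomize which topic lands in which trial
    systems = ["baseline", "debate"]
    rng.shuffle(systems)           # randomize which block comes first
    plan: List[Step] = []
    for i, system in enumerate(systems):
        plan.append((system, "training", None))
        for topic in shuffled[4 * i: 4 * i + 4]:
            plan.append((system, "trial", topic))
    return plan
```

Each participant would get their own seed (for example, a participant ID), so that block order and topic assignment vary across participants while remaining reproducible.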

The study was conducted in a usability lab at a university with N = 40 participants (19 female, 18 male, and 3 non-binary). Participants were recruited using convenience sampling and were aged between 18 and 34 years. They came from a variety of academic disciplines, including applied sciences, natural sciences, engineering, and social sciences. Participants were pre-screened for native-level English familiarity and 20/20 vision (uncorrected or corrected). Upon completion of the study, each participant was compensated.

Data collection during the experimental procedure incorporated multiple sources: pre-task questions, user interaction logs, post-task questions, system evaluation questionnaires, and interview recordings. Eye-tracking data were gathered using the Tobii TX-300 eye-tracker and processed through Tobii Pro Lab 8, a commercial usability and eye-tracking software that enabled raw gaze data collection, user interaction recording, and preliminary data cleaning for subsequent analysis.

RQ1: User Experience in Conceptualizing Controversial Topics through Multi-Persona Debate

From the behavioral data collected in the debate system, we applied K-means clustering to categorize participant behaviors while they used the debate system. Recognizing that participants might display different usage patterns across various topics, we treated each participant’s interaction with each topic in the system as a separate unit of analysis. We characterize a usage pattern for each group as follows:

Active Observers: Participants in this group frequently used thumbs up / down features to express their opinions while observing the debate, and often reviewed the bookmarked content. They typically engaged in more than two debate rounds and showed moderate involvement in selecting and editing personas, balancing supportive and opposing viewpoints.

Multi-round Explorers: Participants in this group were inclined to generate more rounds of debate (up to five) but spent less time reading and digesting the arguments presented. Notably, we observed a preference for selecting personas that agreed with the given topic. Qualitative feedback suggests that this may stem from uncertainty about the topic.

Critical Readers: Participants in this group exhibited the highest level of engagement with the system. Compared to the other two groups, they had the longest debate durations, selected more new personas, and frequently edited these personas. A notable characteristic was that they actively sought out personas that disagreed with the topic. These behaviors suggest significant mental effort invested in generating, evaluating, and refining personas, and in reading content to gain a comprehensive understanding of the topic.

K-means clustering of interactive behavior in the multi-persona debate system. Each column represents a cluster identified by the K-means algorithm, while each row corresponds to a user interaction measure used for clustering. Values reflect the Z-scores of user behavioral measures across the three behavioral groups. The color intensity reflects the magnitude of user engagement with each feature, with dark orange indicating higher values and lighter shades representing medium to low engagement.
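As a rough illustration of the clustering step, the sketch below standardizes behavioral measures to Z-scores and then runs a plain K-means (Lloyd's algorithm) using only the Python standard library. It is not the study's analysis code: the feature layout, the choice to standardize before clustering, the initialization, and the stopping rule are assumptions made for this example.

```python
import random
from statistics import mean, pstdev
from typing import List, Tuple


def zscore_columns(rows: List[List[float]]) -> List[List[float]]:
    """Standardize each column (behavioral measure) to mean 0, SD 1."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) or 1.0 for c in cols]  # avoid division by zero
    return [[(v - m) / s for v, m, s in zip(row, mus, sds)] for row in rows]


def sq_dist(a: List[float], b: List[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[List[float]], k: int, seed: int = 0,
           max_iter: int = 100) -> Tuple[List[int], List[List[float]]]:
    """Lloyd's algorithm: alternate nearest-centroid assignment and
    centroid update until the assignments stop changing."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # random initial centroids
    labels: List[int] = []
    for _ in range(max_iter):
        new_labels = [
            min(range(k), key=lambda j: sq_dist(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:
            break
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = [mean(c) for c in zip(*members)]
    return labels, centroids
```

Here each row would be one participant-topic session and each column one interaction measure (for example, number of debate rounds or thumbs-up/down clicks; these names are illustrative). The returned centroids, being means of Z-scores, correspond to the kind of per-cluster values a heatmap like the one described above would display.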

RQ2: Comparison of User Engagement between Retrieval-based and Multi-persona Debate System

We analyzed participants’ eye movements within attitude-consistent vs. attitude-inconsistent areas of interest (AOIs) to understand how they engaged with the two systems. The statistical results show a clear difference in eye movements between reading attitude-consistent and attitude-inconsistent content when participants used the debate system, but no such difference in the baseline. While topic familiarity yielded no difference in either system, confirmation bias tendency produced a significant difference in the baseline but not in the debate system. Together with the system comparison from the paired t-test, these results lead us to conclude that our debate system effectively reduces selective exposure and increases user engagement with attitude-inconsistent content, while the baseline system activates confirmation bias tendencies, as evidenced by distinct eye movement patterns.

Comparison of total fixation duration on attitude-consistent and inconsistent information, displayed in box plots, between baseline and debate systems, analyzed across levels of topic familiarity and confirmation bias tendency.
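For readers unfamiliar with the paired t-test mentioned above, the sketch below computes its test statistic for two sets of within-subject measurements, such as each participant's fixation duration under the two systems. The function name and input layout are assumptions for illustration; the p-value lookup is omitted because the Python standard library has no t-distribution, and the numbers in the usage note are made up.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Return (t statistic, degrees of freedom) for paired samples.

    a[i] and b[i] must come from the same participant, e.g. the same
    measure under the baseline (a) and debate (b) conditions.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with n >= 2")
    diffs = [y - x for x, y in zip(a, b)]    # per-participant differences
    sd = stdev(diffs)                        # sample SD (n - 1 denominator)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    n = len(diffs)
    t = mean(diffs) / (sd / sqrt(n))         # mean difference / standard error
    return t, n - 1
```

For example, `paired_t([1, 2, 3, 4], [2, 4, 5, 4])` gives a t statistic of about 2.61 with 3 degrees of freedom; the statistic would then be compared against the t-distribution to obtain a p-value.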

Additionally, the eye-tracking results show a significant difference in eye movements between behavior clusters within the debate system, whereas content-belief consistency did not significantly influence fixation counts. This suggests that user engagement was tied more closely to participants’ behavior patterns when using the debate system than to whether the content matched their prior beliefs.

Comparison of normalized fixation duration and fixation counts on attitude-consistent and inconsistent information across user behavior groups. Critical Readers had significantly higher fixation counts than the other groups, which suggests that they were more visually engaged with the content. Together with the qualitative results describing how Critical Readers actively engaged with various personas and generated content, this indicates that certain behavioral patterns, such as that of Critical Readers, show a clear sign of engagement in the eye-movement data.

RQ3: Comparison of User Belief Changes between Retrieval-based and Multi-persona Debate System

The results showed that the system conditions did not yield a statistically significant difference in participants’ non-directional belief changes (i.e., whether a participant’s belief changed or remained unchanged), but there was a clear distinction for directional belief changes. The debate system was more likely to cause participants to become less supportive of their initial stance, whereas the baseline tended to reinforce prior beliefs, making them more supportive. Additionally, user belief changes exhibited a statistically significant increase as topic familiarity decreased. While a statistically significant difference was also observed with confirmation bias tendencies, the trend did not fully align with our hypothesis. This discrepancy may be due to the three-level classification of bias tendency scores, indicating a need for further investigation in future studies.
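The distinction between non-directional and directional belief change can be made concrete with a small sketch. How beliefs were actually measured is not described here, so this example assumes pre- and post-task stance ratings on a scale with a neutral midpoint (a hypothetical 1 to 7 scale with midpoint 4); the function name and category labels are likewise illustrative.

```python
from typing import Tuple


def classify_belief_change(pre: float, post: float,
                           midpoint: float = 4.0) -> Tuple[bool, str]:
    """Return (changed, direction) for one participant-topic pair.

    changed   : non-directional change, True if the rating moved at all.
    direction : change relative to the initial stance; a move toward the
                participant's original side of the midpoint counts as
                'more_supportive', a move away as 'less_supportive'.
    """
    changed = post != pre
    if not changed:
        return False, "unchanged"
    if pre == midpoint:
        # A neutral starting point has no initial stance to support.
        return True, "no_initial_stance"
    side = 1 if pre > midpoint else -1   # which side the participant began on
    shift = (post - pre) * side          # positive = further toward that side
    return True, "more_supportive" if shift > 0 else "less_supportive"
```

Under these assumptions, a participant who moves from 6 to 5 and one who moves from 2 to 3 both count as less supportive of their initial stance, even though their ratings moved in opposite numeric directions.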